Sometimes the urge to write about a specific topic dawns on me out of nowhere, often at night, since that is my most productive time. This is one of those occasions, and also another stream-of-consciousness piece. I often favor these pieces, but I hold off on them because I feel lazy writing them.

For my Language-centered piece, I assigned a kind of homework: I recommended that readers interested in the upcoming article watch a specific animated movie. The same applies here. While this is the first time I have recommended this animation on Substack, I have recommended it on Twitter every year. I will not spoil its big reveal, but it falls squarely within current themes.

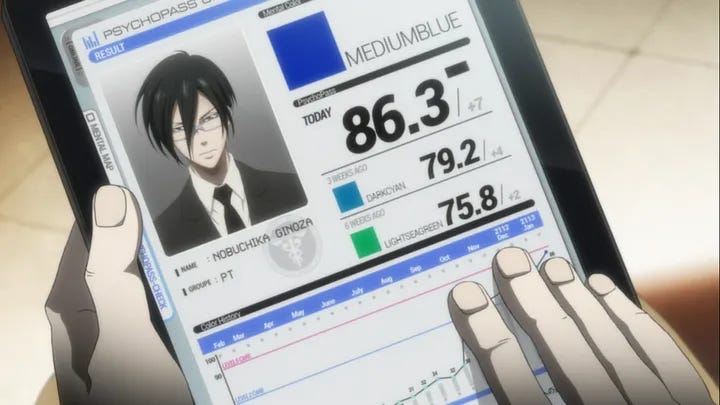

The opening of the animation. Fair warning: it is not an animated movie but an animated series, so it is long, and it will take you a few days or weeks to get through. Worth every second.

The name Psycho-Pass is also a play on how the Japanese pronounce and enunciate foreign words, especially English ones. Its main meaning is explained below, but the wordplay can also be read as “psychopath”.

The anime is set in a dystopian future where crime has grown rampant worldwide, with famine, civil unrest, resource shortages, and wars ever increasing. One country, however, managed to reverse these trends and “fix” itself, becoming as close to paradise on Earth as possible: almost crimeless.

Japan developed and deployed an advanced AI system (I told you it was central to our times) called the Sibyl System. It created a different kind of surveillance state, and its main use is measuring the Psycho-Pass, a numerical value that represents a person’s mental state and their criminal potential. It does so by reading a “cymatic scan” of the brain waves and brain activity of every individual its cameras can register.

The Psycho-Pass is affected by various factors, such as stress, trauma, emotions, environment, and social interactions. The system assigns each person a score called the “Crime Coefficient” and assigns a hue to their brain wave readings. A hue that is too cloudy or dirty, or a score between 100 and 299, earns you State-mandated mental health and drug treatment; the closer the score is to 299, the more likely the person is to become a target for preemptive apprehension.

Score above 300, or show a hue that is too dark or muddied, and you become a target for outright elimination. This is where the crime prevention aspect comes in, clearly inspired by Minority Report.
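To make these bands concrete, here is a toy Python sketch of how such a threshold rule could be written down. It is purely illustrative and not anything shown in the series: the names `Verdict` and `sibyl_judgment`, the three hue categories (`"clear"`, `"cloudy"`, `"dark"`), and the choice to treat a score of exactly 300 as the start of the elimination band are my own assumptions. Since either a bad score or a bad hue is enough to trigger a verdict, the sketch takes the harsher of the two.

```python
from enum import Enum


class Verdict(Enum):
    # Hypothetical labels for the three outcomes described in the text.
    CLEAR = "no action"
    TREATMENT = "state-mandated mental health and drug treatment"
    ELIMINATION = "target for elimination"


# Severity ordering, so the harsher of two verdicts can be selected.
_SEVERITY = {Verdict.CLEAR: 0, Verdict.TREATMENT: 1, Verdict.ELIMINATION: 2}


def verdict_from_score(crime_coefficient: float) -> Verdict:
    """Map a Crime Coefficient to a band (assumption: 300 opens the lethal band)."""
    if crime_coefficient >= 300:
        return Verdict.ELIMINATION
    if crime_coefficient >= 100:
        return Verdict.TREATMENT
    return Verdict.CLEAR


def verdict_from_hue(hue: str) -> Verdict:
    """Hypothetical hue categories standing in for the show's color readings."""
    return {
        "clear": Verdict.CLEAR,
        "cloudy": Verdict.TREATMENT,
        "dark": Verdict.ELIMINATION,
    }[hue]


def sibyl_judgment(crime_coefficient: float, hue: str) -> Verdict:
    """Either signal alone can trigger a verdict, so return the harsher one."""
    by_score = verdict_from_score(crime_coefficient)
    by_hue = verdict_from_hue(hue)
    return max(by_score, by_hue, key=_SEVERITY.__getitem__)


if __name__ == "__main__":
    print(sibyl_judgment(42, "clear").value)   # no action
    print(sibyl_judgment(180, "clear").value)  # state-mandated mental health and drug treatment
    print(sibyl_judgment(60, "dark").value)    # target for elimination
```

The unsettling part, even in toy form, is how little logic it takes: a couple of thresholds decide a person's fate.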

As a secondary use of your Psycho-Pass, the Sibyl System assigns people to careers: after you graduate from high school, the system judges you and determines your entire life. The obvious real-world comparison is China’s Social Credit System.

As anyone paying attention knows, AI development has been skyrocketing. Until recently, progress in the field was measured in years or months; now it is measured in weeks. New applications are published daily, and the entire field, from academia to applied usage, is growing exponentially. Some of the uses uncovered by researchers have a deeper and more significant impact on society than others.

Beyond Memorization: Violating Privacy via Inference with Large Language Models

Everything covered in the publication above was already possible to some degree, but never to this extent, this widely, or this easily at scale. The authors demonstrate that, using public data (the source hardly matters: Reddit, Twitter, Facebook, any social media and beyond), the current Large Language Models (LLMs) they tested can infer personal attributes such as income, gender, location, and age. Accuracy varies from model to model, but every one of them does it well.

With these models, it takes very little tweaking to build a complete profile, including a psychological analysis. I have long argued that most people willingly leave too much information and data on social media. Social media companies have already been doing some of what is described above, to varying degrees, but now it will become much easier.

This is especially true since the recent algorithmic changes across social media, which rank content solely on sentiment analysis and engagement, creating an endless cycle of emotionally driven, overly divisive, and aggressive “content production”. In our analogy, instead of punishing criminals, social media, especially Twitter, now rewards them. But this type of AI-enabled analysis alone isn’t enough to draw parallels with Psycho-Pass.

This new technology from Meta is.

Towards a Real-Time Decoding of Images from Brain Activity

At every moment of every day, our brains meticulously sculpt a wealth of sensory signals into meaningful representations of the world around us. Yet how this continuous process actually works remains poorly understood.

Today, Meta is announcing an important milestone in the pursuit of that fundamental question. Using magnetoencephalography (MEG), a non-invasive neuroimaging technique in which thousands of brain activity measurements are taken per second, we showcase an AI system capable of decoding the unfolding of visual representations in the brain with an unprecedented temporal resolution.

This AI system can be deployed in real time to reconstruct, from brain activity, the images perceived and processed by the brain at each instant. This opens up an important avenue to help the scientific community understand how images are represented in the brain, and then used as foundations of human intelligence. Longer term, it may also provide a stepping stone toward non-invasive brain-computer interfaces in a clinical setting that could help people who, after suffering a brain lesion, have lost their ability to speak.

Leveraging our recent architecture trained to decode speech perception from MEG signals, we develop a three-part system consisting of an image encoder, a brain encoder, and an image decoder. The image encoder builds a rich set of representations of the image independently of the brain. The brain encoder then learns to align MEG signals to these image embeddings. Finally, the image decoder generates a plausible image given these brain representations.
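The middle step of that pipeline, where the brain encoder “learns to align MEG signals to these image embeddings”, is the easiest part to illustrate. Below is a minimal NumPy sketch of one common way to train such an alignment, a CLIP-style contrastive (InfoNCE) loss, together with retrieval of the closest image embedding for each brain embedding. This is my own illustration under stated assumptions, not Meta’s code: the embeddings are random stand-ins rather than real MEG or image features, and the function names and the temperature of 0.1 are hypothetical. The third stage, generating an image from the aligned embedding, would require a pretrained generative model and is left out.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def contrastive_loss(brain_emb: np.ndarray, image_emb: np.ndarray,
                     temperature: float = 0.1) -> float:
    """InfoNCE loss: row i of brain_emb should match row i of image_emb and no other row."""
    b = l2_normalize(brain_emb)
    v = l2_normalize(image_emb)
    logits = b @ v.T / temperature                        # (n, n) similarity matrix
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The correct brain/image pairing for each sample sits on the diagonal.
    return float(-np.mean(np.diag(log_probs)))


def retrieve(brain_emb: np.ndarray, image_emb: np.ndarray) -> np.ndarray:
    """For each brain embedding, return the index of the most similar image embedding."""
    sims = l2_normalize(brain_emb) @ l2_normalize(image_emb).T
    return sims.argmax(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image_emb = rng.normal(size=(8, 32))                           # stand-in image-encoder outputs
    aligned = image_emb + 0.1 * rng.normal(size=image_emb.shape)   # a well-trained brain encoder
    unaligned = rng.normal(size=image_emb.shape)                   # an untrained brain encoder
    print(contrastive_loss(aligned, image_emb), contrastive_loss(unaligned, image_emb))
    print(retrieve(aligned, image_emb))
```

Training would push the brain encoder’s outputs toward the “aligned” case, where the loss is low and each brain embedding retrieves the image it came from.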

While the generated images remain imperfect, the results suggest that the reconstructed image preserves a rich set of high-level features, such as object categories. However, the AI system often generates inaccurate low-level features by misplacing or mis-orienting some objects in the generated images. In particular, using the Natural Scene Dataset, we show that images generated from MEG decoding remain less precise than the decoding obtained with fMRI, a comparably slow-paced but spatially precise neuroimaging technique.

The dual-use nature of AI is very explicit, from synthetic biology to surveillance, but no issue is more glaring than the two presented here, both of which carry the potential to eradicate privacy entirely. Decoding images from the brain still has many strides to go, especially before it could be weaponized (bear in mind that, for now, it is a private technology, so governments would have some difficulty exploiting it). Using LLMs to infer large amounts of personal information, by contrast, is within anyone’s reach.

Given the current levels of polarization, engagement-driven social media, which financially rewards whoever goes most viral regardless of the quality of information, is, among a myriad of other problems, a breeding ground for deploying, training, and successfully using these tools.

All social media sites and their decaying algorithms now assign scores to your posts and your behavior, and your engagement and reach depend solely on those scores. Unlike in the hyper-utilitarian fictional society of Psycho-Pass, here the worse your behavior, the greater your reward.

Every time you are manipulated through your emotional response to a social media post, you are in fact being engaged and targeted by algorithms and systems you are not even aware of. And for the record, regulating Large Language Models and advanced AI will put these capabilities, and much more, in the hands of only a handful of companies and the government…

FYI, Twitter is now a cesspool of automated content and engagement farming, with hundreds of verified bots infesting the platform.

The intro to your article made me think of Bhutan. I've seen several documentaries about this small country, with its majestic nature, conservation, and friendliness; it did indeed sound like paradise on earth.

I'm expecting massive waves of automated AI identity spoofing attacks soon. What sort of remote authentication is robust enough to withstand profiling and real-time voice/video generation? Even if you use a password or electronic authenticator, most institutions are vulnerable via a "password reset" backdoor. Even if 99.5% of these attacks are thwarted, the ability to automate it will make it incredibly destructive.