How to end AI safety and burn 80 billion dollars in 1 day

Effective destructionism

Events are still unfolding as of right now, but enough of the dust has settled for me to write about it. I tend to be matter-of-fact and cite as many sources as I can, to give proper context and backing, but this will be a mixed piece.

OpenAI is a mission-driven company with the strongest AI and machine learning talent pool in the world. Mission-driven companies are unlike other companies, and in OpenAI's case the difference is even more pronounced. Most OAI employees could easily make a lot of money elsewhere; they stay at the company because they believe in the mission, and in the capacity of its leadership to take them to that goal.

Employees of mission-driven companies tend to hold uniquely positioned, highly skilled CEOs in high regard, especially ones regarded as “good people”. Sam Altman is regarded as one of the best “good people” in SV, willing to help almost anyone.

The major problem, one I have pointed out multiple times, is that the upper echelon of OpenAI (basically its entire Board of Directors) is religiously invested in Effective Altruism and AI Safety. Within a few minutes of reading this piece, you will understand how this was the defining variable in the events that unfolded.

In case you haven’t paid any attention to social media, or indeed to any news site on the planet, in the last 60 hours: the CEO of the leading AI company in the world was summarily fired without any prior notice, communication, or press release. It will go down as one of the most short-sighted moves in corporate history.

In the same call, another co-founder of the company, Greg Brockman, was removed from his position as chairman but kept at the company. Shortly after, Greg quit; he didn’t agree with the board’s decision, especially with how they carried it out (on a Google Meet call, quite literally just telling Sam he was fired).

GPT-4 is the culmination of 10 years of engineering, the efforts of hundreds of people, and roughly 100 billion dollars in cost, but it only worked because of Greg. He solved a problem they had been stuck on for months.

There has been much speculation about why he was fired without any prior notice, motive, or information; when board members were pressed, publicly and privately by employees, no answer was given. In another historic corporate move, Satya Nadella, Microsoft’s CEO, posted what amounted to a public letter. The text is as follows:

> We remain committed to our partnership with OpenAI and have confidence in our product roadmap, our ability to continue to innovate with everything we announced at Microsoft Ignite, and in continuing to support our customers and partners. We look forward to getting to know Emmett Shear and OAI's new leadership team and working with them. And we’re extremely excited to share the news that Sam Altman and Greg Brockman, together with colleagues, will be joining Microsoft to lead a new advanced AI research team. We look forward to moving quickly to provide them with the resources needed for their success.

In simpler words: “Whatever you tried to do didn’t work. Whoever you appointed as new CEO, we don’t care. The guys you just fired? They now have unlimited money to develop anything, and to poach all your employees. Get fucked.”

A historic lesson in how to destroy a 100-billion-dollar company, and the most important AI lab on the planet, in one single move.

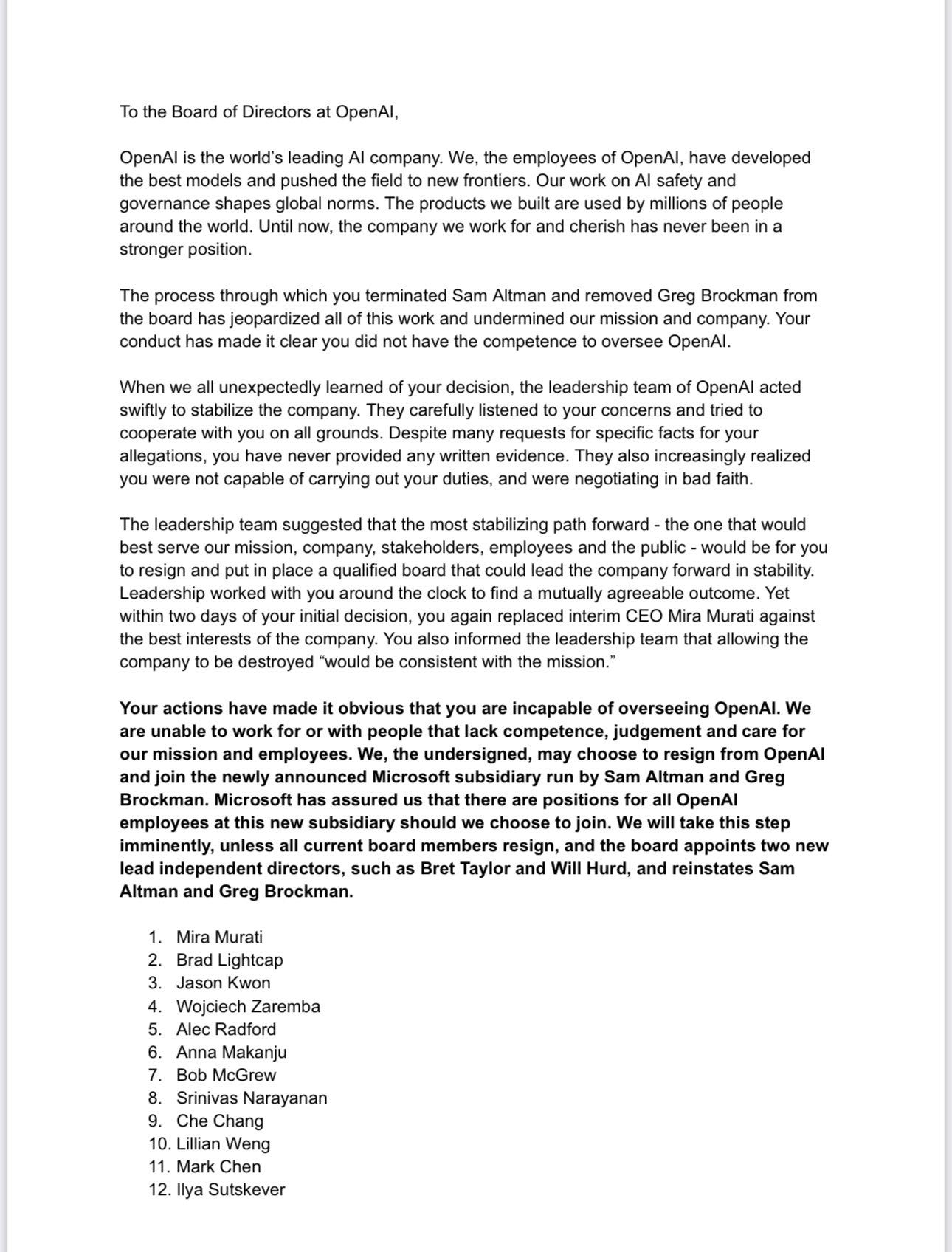

Now, why do I name Effective Altruism as the driving force in this attempted coup? Because 700+ OpenAI employees signed a letter telling the world this was a “safety coup”. EAs destroyed the world’s leading AI company out of fear of AI, something their cult of doom has been parroting for years now.

The relevant section of the letter: the board told the leadership team that allowing the company to be destroyed “would be consistent with the mission”.

The board members were not comfortable with the speed, the implementation of features, and the safety of the powerful models Sam was deploying. If you are lost in all the twists and turns and are asking yourself how Microsoft could pull this off, the answer is simple. GPT-4 may be amazing, and maybe the leading LLM at the moment, but it requires an absurd amount of computing power.

Scaling this fast would never have been possible without a partner, and part of MSFT’s investment in OpenAI came in the form of “Azure credits”. Azure is Microsoft’s cloud computing ecosystem, and paying in credits was a smart, strategic move: it let OpenAI rent GPUs (computing power) while giving Microsoft an indirect say over the company.

Contrary to popular belief, MSFT has no rights to any of the tech developed by OpenAI, especially not to what it wants most: the weights. Weights, as the name suggests, are how a language model assigns importance to specific words and information; they are how the model learns and knows how to respond (in a very simplified sense). Acquiring the weights, or the entire staff of OpenAI, would enable MSFT to accelerate the development of GPT-4 or more capable models at an absurd pace.

95% of the OpenAI workforce is now threatening to quit if the board doesn’t resign. Microsoft may end up acquiring OAI for 0 dollars.

The board members who fired Sam are Tasha McCauley, a big Effective Altruism donor and believer in the cult; Adam D’Angelo, Quora’s CEO, whose product directly competes with OAI; and Ilya Sutskever, co-founder and one of the leading AI researchers, but also one of the loudest voices in AI safety. The last board member is Helen Toner. From the link: “Being involved in the EA community can be stressful — there are too many problems in the world, and each of us can only do so much.” Helen is also a Georgetown alumna, aka Spy School.

The Center for Security and Emerging Technology deserves an article of its own, since it has provided, and still provides, a roadmap of what the AI safety people have in mind, and that roadmap cuts both ways. These are not brilliant people, so their white papers usually telegraph their intentions: the purported dangers are often the intentions.

Now to the point of this article. Effective Altruists are a destructive force, incapable of understanding complex situations and even less capable of understanding how short-term decisions may impact the world in the long term; this is a hallmark of their behavior.

EAs will willfully burn down an 80-billion-dollar company and attempt to destroy or derail the development of (positive) world-changing tech out of unfounded, poorly thought-out fear. They are a national security risk like no other. Anyone running a tech company, especially an AI company, should get rid of any EA element.

And now these morons have achieved the exact opposite of their intentions, killing many researchers’ real interest in safety and pouring fuel on development, becoming effective accelerators. Before all of this mess, the UK, following the lead of France and Germany, decided to stop attempts to regulate AI in the short term. Zuckerberg quietly did the same amid the chaos.

A fight for free, open-source AI is a fight for the future of humanity. Steps in the right direction have been made; the future looks promising, and so does MSFT’s positioning. And, just for a little fun, here is where Microsoft stands with regard to AI, safety, and slowing down, in case you wondered (screen capture from an interview 30 minutes ago).

Thank you for your support!

Professor - Excellent sleuthing. "A fight for free, open-source AI is a fight for the future of humanity."

This demon spawns from the depths of hell - Oxford - funded in part by WEF & Musk. They share a bloody office with CEA, and Toner is on staff. The name of this org? Future of Humanity Institute, also funded by the Future of Life Institute - oh the irony of it all Mr. Mulder.

https://www.fhi.ox.ac.uk/ai-governance/govai-2019-annual-report/#team

https://en.wikipedia.org/wiki/Future_of_Humanity_Institute

https://en.wikipedia.org/wiki/Centre_for_Effective_Altruism

https://en.wikipedia.org/wiki/Future_of_Life_Institute

My paradoxical position on AI safety, tilting between being vehemently opposed to it and being pissed off that someone would accelerate the hell out of it, is complicated, but simple.

Years ago, in my former "professional" life, one of my personal goals, in which I invested a lot of company resources, was AI safety and disruption. Now I find myself in the polar opposite position: wanting to accelerate it, so everyone has equal access and almost equal capabilities. This moment will inevitably give birth to some problems down the road, but overall it is mostly positive.